AI Magic: What It Takes To Run AI Language Models

AI is mainstream. We encounter AI-infused products every day when we talk to digital voice assistants like Alexa and Siri, browse personalized social feeds and targeted marketing, and even use emerging tech like self-driving vehicles. We’re at the point where AI is becoming so common that it’s easy to forget how remarkable it is.

Language models, like the ones AI Dungeon and Voyage experiences are all built upon, are some of the most sophisticated and advanced forms of AI in use today. Most of the AI tools you’re familiar with find patterns or trends in something that already exists. Language models use AI to generate text, meaning the AI is actually producing something new. AI is now creative.

The amount of computing power needed to build, train, and run an AI language model is astounding. Let’s take a deeper look at what is happening whenever a language model is used.

Compute required for training a Language Model

Language models have to be “trained”, and the process requires some of the most capable supercomputers to pull off. The larger the model, the more computing resources are required.

Language model size is measured in “parameters”. A small model might have 1.5 billion parameters. A medium model, 13 billion parameters. Large models have as many as 175 billion parameters, and there are even bigger models that aren’t currently feasible for consumer use. In theory, the more parameters a model has, the more knowledge and understanding the model has.

Computing power is measured in TFLOPS (trillions of floating-point operations per second). The PlayStation 5 is capable of 10.4 TFLOPS. The iPhone 13 Pro Max, Apple’s top-of-the-line smartphone, is capable of 1.5 TFLOPS.

To give you an example of how much computing power is needed to train an AI language model, consider GPT-3, a large language model with 175 billion parameters. According to one estimate, training the GPT-3 neural network required a total of 311,400,000,000 trillion floating-point operations, the equivalent of a 1 TFLOPS machine running for 311,400,000,000 seconds. That’s 311 billion. With a B.
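To put that number next to the devices mentioned earlier, here is a rough back-of-the-envelope sketch. It reads the 311,400,000,000 figure as a total count of trillions of operations and assumes each device could sustain its peak rate around the clock, ignoring memory limits and every other practical obstacle. The names `TRAINING_TFLOP` and `years_to_train` are our own, chosen for illustration.

```python
# Figures quoted in this article.
TRAINING_TFLOP = 311_400_000_000  # total trillions of operations to train GPT-3
DEVICE_TFLOPS = {
    "PlayStation 5": 10.4,
    "iPhone 13 Pro Max": 1.5,
}
SECONDS_PER_YEAR = 365 * 24 * 3600


def years_to_train(total_tflop: float, tflops: float) -> float:
    """Years of non-stop computing at a sustained rate of `tflops`.

    Assumption: the device runs at its peak rate the whole time and
    memory is not a constraint, which is wildly generous.
    """
    return total_tflop / tflops / SECONDS_PER_YEAR


for name, rate in DEVICE_TFLOPS.items():
    years = years_to_train(TRAINING_TFLOP, rate)
    print(f"{name}: about {years:,.0f} years")
```

Under those generous assumptions, the console would need on the order of 950 years and the phone more than 6,500.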

Performing this training on a single V100 GPU server (which is an “affordable” supercomputer that costs over $10,000 to purchase) would theoretically take 355 years to complete. Additionally, if you rented time on this machine at the market rate of $1.50 per hour, you would end up spending $4.6 million to train the model.
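The rental figure can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in this article (355 years, $1.50 per hour, 311,400,000,000 trillion operations) and assumes 365-day years; the variable names are ours. It also works backwards to the sustained speed the estimate implies for the V100, which is a derived number and not a quoted spec.

```python
# Figures quoted in this article.
TRAINING_TFLOP = 311_400_000_000  # total trillions of operations
V100_YEARS = 355                  # estimated training time on one V100 server
RATE_USD_PER_HOUR = 1.50          # quoted rental rate
HOURS_PER_YEAR = 365 * 24         # assumption: 365-day years

gpu_hours = V100_YEARS * HOURS_PER_YEAR
rental_cost = gpu_hours * RATE_USD_PER_HOUR

# Throughput implied by the estimate: total work divided by total seconds.
implied_tflops = TRAINING_TFLOP / (gpu_hours * 3600)

print(f"GPU hours:      {gpu_hours:,}")
print(f"Rental cost:    ${rental_cost:,.0f}")
print(f"Implied speed:  {implied_tflops:.1f} TFLOPS sustained")
```

The cost comes out just under $4.7 million, in line with the $4.6 million estimate, and the implied speed is a little under 28 TFLOPS.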

The fact that language models like GPT-3 even exist is remarkable, and represents profound technological and human innovation.

Compute required to use an AI Language Model

Once the language model has been trained, it still requires intensive computing power to use in a game or application. In AI Dungeon, for example, we make a call to the language model every time someone types a new action into their adventure story. Each action is sent to the AI, a response is generated, and that response is sent back to be displayed in the AI Dungeon interface.

Let’s imagine you wanted to run AI Dungeon on a desktop computer. It’s something that’s actually been requested before, and community members even built a version (available here)! However, it’s not what you might expect. This version of AI Dungeon “is much less coherent” because it relies on a small-sized language model. Trying to run AI Dungeon’s medium-sized language models on a modern desktop computer would be like trying to play a modern 3D video game like Call of Duty on a 1990s PC running off floppy disks.

In one thread, a Reddit user correctly stated, “If you want to run your own full GPT-3 (Large) instance you’ll need this $200,000 DGX A100 graphics card from NVidia. ...and you might need two of them. (5 petaflops / 320GB video memory).” In response, another user pointed out “So....maybe 3-4 generations till it's feasible at home. Not bad.”

That’s optimistic.
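One way to see why a single machine “might” not be enough is to estimate how much memory the model’s parameters alone occupy. The sketch below assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but an assumption on our part, and it counts only the weights, not the extra working memory needed while generating text. The 320GB figure comes from the Reddit quote above; the function names are illustrative.

```python
import math

BYTES_PER_PARAM = 2       # assumption: 16-bit (half-precision) weights
DGX_A100_MEMORY_GB = 320  # video memory quoted in the Reddit thread

# Model sizes mentioned in this article.
MODEL_PARAMS = {
    "small (1.5B)": 1.5e9,
    "medium (13B)": 13e9,
    "large (175B)": 175e9,
}


def weights_gb(params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return params * bytes_per_param / 1e9


def machines_needed(params: float, memory_gb: float = DGX_A100_MEMORY_GB) -> int:
    """Minimum number of machines whose combined memory fits the weights."""
    return math.ceil(weights_gb(params) / memory_gb)


for label, params in MODEL_PARAMS.items():
    print(f"{label}: {weights_gb(params):,.0f} GB of weights, "
          f"{machines_needed(params)} machine(s)")
```

By this estimate a 175 billion parameter model needs roughly 350GB for its weights alone, which is already more than one 320GB machine can hold.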

AI language models are becoming affordable for consumers

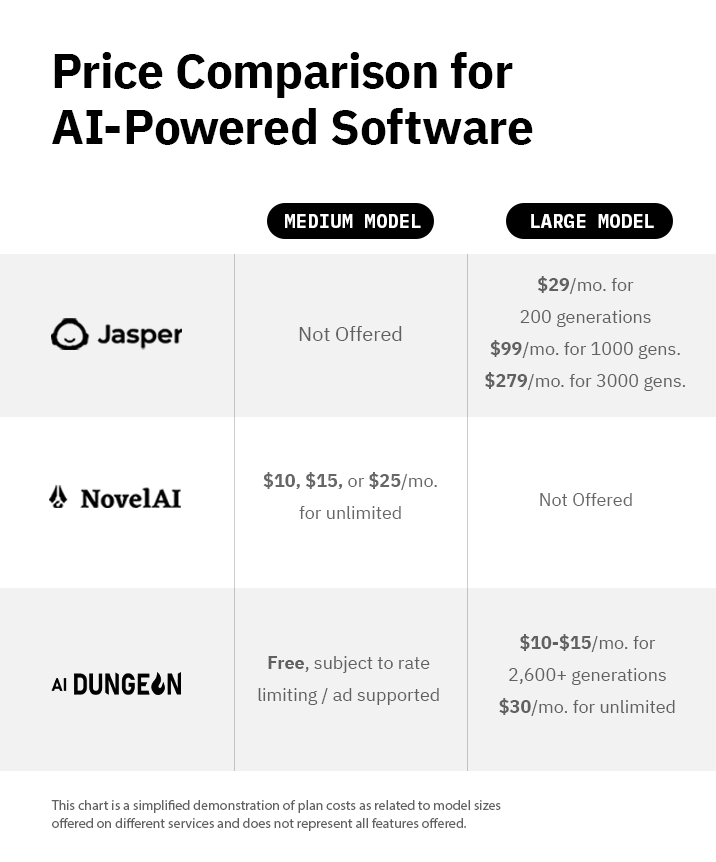

Given the costs required to train and use an AI language model, it’s surprising how many consumer-facing products exist that use language models at prices that individuals can afford.

Jasper charges $29/month for around 200 generations (assuming about 100 words or tokens per generation). For 2,000 generations of 100 tokens each, they charge $169/month for their starter package or $279/month for their advanced package.

Novel AI offers 100 generations as a free trial and has paid plans for $10, $15, or $25 a month. They utilize medium-size models (6B, 13B, and 20B) with multiple configurations.

AI Dungeon players enjoy unlimited (rate-limited/ad-supported) generations from our Medium (6B) model for free. By subscribing to Adventurer or Hero memberships ($10/month and $15/month respectively), players get 2,600+ generations per month from our Large model. Legend members ($30/month) get unlimited generations from our Large model.
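For the plans above that come with a stated number of generations, the price per generation is easy to work out. The sketch below uses only the prices and generation counts quoted in this section and treats “2600+” as exactly 2,600, so the AI Dungeon figures are an upper bound on the real cost per generation. The plan labels and the `price_per_generation` helper are ours. The plans also differ in model size and features, so this is a rough comparison at best.

```python
# (monthly price in USD, generations per month) as quoted in this section.
PLANS = {
    "Jasper (200 generations)": (29, 200),
    "Jasper starter": (169, 2000),
    "Jasper advanced": (279, 2000),
    "AI Dungeon Adventurer": (10, 2600),  # "2600+", so cost is an upper bound
    "AI Dungeon Hero": (15, 2600),        # "2600+", so cost is an upper bound
}


def price_per_generation(monthly_usd: float, generations: int) -> float:
    """Dollars paid per generation if the full allowance is used."""
    return monthly_usd / generations


for plan, (price, generations) in PLANS.items():
    cents = price_per_generation(price, generations) * 100
    print(f"{plan}: about {cents:.2f} cents per generation")
```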

The future is even more exciting

AI continues to benefit from advancements in technology and innovation. Language models are becoming more affordable. Before long, AI experiences will be just as affordable as traditional games or digital experiences.

We’re preparing to launch Voyage Studio so that anyone can create AI-powered experiences. We spend significant time and energy ensuring AI usage is sustainable cost-wise for creators and players. Our experience building and growing AI Dungeon has taught us a lot about how AI-powered games can sustainably run. As performance improves and costs come down, more people will be able to experience the joy of AI-based creation tools, experiences, and games.