New AI Models and Infrastructure for AI Dungeon!

AI quality is one of the most important things users want us to improve. After several months of work, we’re releasing a new and significantly improved Wyvern 2.0 model to beta TODAY! A new Griffin model based on similar changes will follow soon after. We’d also like to share a number of significant improvements we’ve made to our AI model infrastructure to enable faster, more stable, and more continuous improvements to the AI.

New Wyvern & Griffin Models

Wyvern 2.0

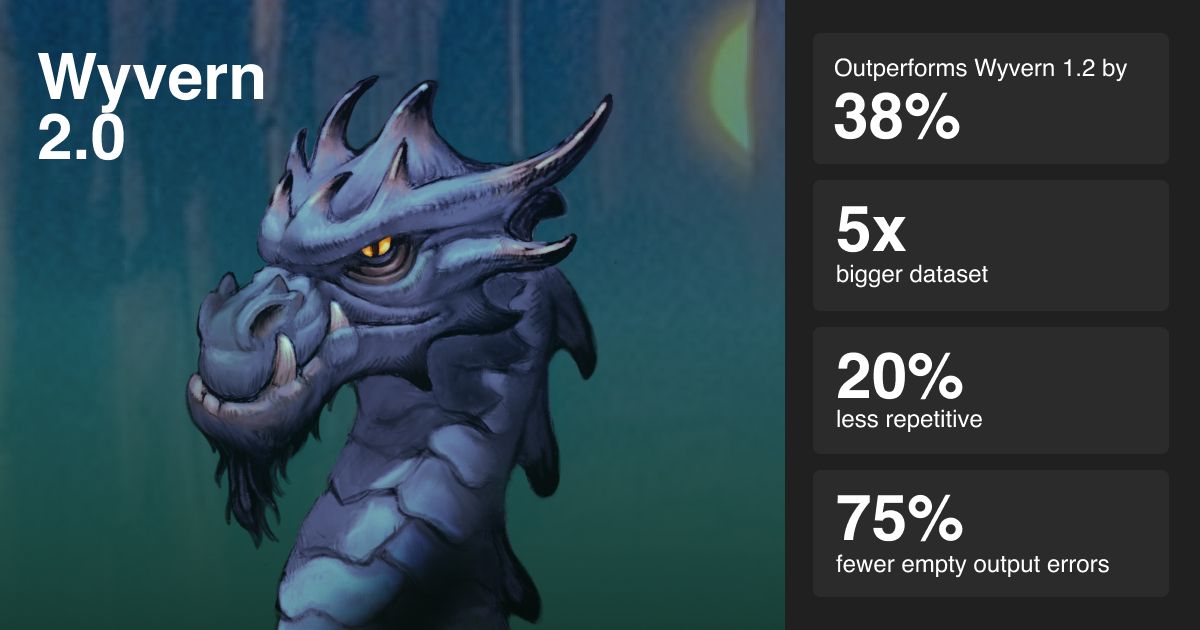

Starting today, premium users will have access to a new beta Wyvern model, which they can try out by switching to “adventure-wyvern-v2.0 (beta)”. This model has a number of significant improvements, and on our “Improve the AI” tests it outperforms Wyvern 1.2 by 38%!

Wyvern 2.0 is based on a new, more intelligent foundation model from AI21. We also trained it on an expanded and more thoroughly cleaned dataset that is 5X bigger than our previous dataset.

In addition, we updated how we process and handle AI model outputs, leading to a significant reduction in empty outputs and repetition. Our evaluation suite tests show 75% fewer empty output errors and a 20% drop in repetition.

Griffin 2.0

But these improvements aren’t just coming to Wyvern. We are currently polishing a new Griffin model for free users too! This new Griffin model will take advantage of many of the same improvements to make the free user experience better as well.

New AI Processes and Systems

A few months ago we talked about our plans to improve the AI. AI quality remains one of the areas where players most often request improvements.

Since then, we've done a significant overhaul of our AI model system so that we can build, evaluate, and improve AI models much faster and with much greater stability. We’ve dedicated more resources internally to continually improve the most important part of the game experience.

It’s also been unclear to players when their AI experience (good or bad) was caused by updates to the models or simply due to the randomness of the AI. We believe that communicating AI versioning more clearly will help players see when and how the AI has changed.

In the past, we lacked evaluation systems to confidently assess whether changes improved the AI experience. With these new systems we can be much more thorough in testing new AI versions, letting us make sure that changes to the AI only improve the experience.

This will lengthen the review process that new models go through before reaching production. But when we release them, they will be better tested and backed by clear evidence that they improve the experience.

AI Version System

In order to improve how we communicate and evaluate AI systems, we realized that we needed to be more explicit about different AI versions and what changes they represent. An AI version system helps us communicate more clearly to users when and how the AI is changing, and also evaluate AI changes in a more systematic way.

We refactored all of our systems to be based on an “AI Version.” An AI version includes not just the model it leverages but also the code that constructs the prompt, configures the model request, transforms the outputs, and picks which output to deliver to users.

Now we can quickly create new AI versions, evaluate them in various ways, and promote them to alpha, beta, or production depending on their progress. Models will go through a more thorough and careful process before being promoted to the production experience.
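To make the idea concrete, here is a minimal sketch of what an AI version record and its promotion step could look like. It is only an illustration under our own assumptions: the names `AIVersion`, `Stage`, `promote`, the `DRAFT` stage, the `model_id` and `core_id` fields, and the forward-only promotion rule are all hypothetical and not the actual AI Dungeon code.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Stage(Enum):
    # DRAFT is a hypothetical starting point; alpha, beta, and production
    # are the stages named in the post.
    DRAFT = "draft"
    ALPHA = "alpha"
    BETA = "beta"
    PRODUCTION = "production"


@dataclass(frozen=True)
class AIVersion:
    name: str       # e.g. "adventure-wyvern-v2.0"
    model_id: str   # hypothetical identifier of the underlying model
    core_id: str    # hypothetical identifier of the processing code
    stage: Stage = Stage.DRAFT


def promote(version: AIVersion, target: Stage) -> AIVersion:
    """Return a copy of the version at a later stage (assumed forward-only)."""
    order = list(Stage)  # Enum members iterate in definition order
    if order.index(target) <= order.index(version.stage):
        raise ValueError(f"cannot move from {version.stage.value} to {target.value}")
    return replace(version, stage=target)


wyvern = AIVersion("adventure-wyvern-v2.0", "example-model", "example-core")
wyvern_beta = promote(wyvern, Stage.BETA)
print(wyvern_beta.stage.value)
```

Keeping the record immutable means each promotion produces a new value, so earlier stages of the same version stay available for comparison.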

AI Core System

To enable versioning of all the code involved with the AI, we re-architected our systems so that each AI version is connected to an AI “Core”. The AI Core includes all the processing functions for how we structure prompts, pass them into the model, transform the outputs, filter out bad responses, and rank which response we should deliver to the users.

With our new AI core system, we can easily test and evaluate not just different AI models but also all the code that affects how well those models are used to deliver outputs to users.
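The steps an AI Core covers (structure the prompt, call the model, transform the outputs, filter bad responses, rank what remains) can be sketched as a small pipeline. This is a rough illustration, assuming each step is a plain function; the `AICore` class, its field names, and the toy functions below are hypothetical stand-ins, not our production code.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AICore:
    # Each field is one hypothetical processing step of the core.
    build_prompt: Callable[[str], str]       # story text -> prompt
    call_model: Callable[[str], List[str]]   # prompt -> raw candidate outputs
    transform: Callable[[str], str]          # clean up a single output
    is_acceptable: Callable[[str], bool]     # filter out bad responses
    score: Callable[[str], float]            # rank the remaining responses

    def respond(self, story: str) -> str:
        prompt = self.build_prompt(story)
        candidates = [self.transform(c) for c in self.call_model(prompt)]
        kept = [c for c in candidates if self.is_acceptable(c)]
        if not kept:
            return ""  # nothing survived filtering
        return max(kept, key=self.score)


# Toy stand-ins so the sketch runs without a real model.
toy_core = AICore(
    build_prompt=lambda story: story[-200:],
    call_model=lambda prompt: ["  You open the door. ", "", "You wait"],
    transform=str.strip,
    is_acceptable=lambda text: bool(text),
    score=lambda text: float(len(text)),
)
print(toy_core.respond("You stand before a door."))
```

Because every step is swappable, two AI versions can share the same model and differ only in, say, the filter or the ranking function, which is the kind of change the core system lets us test.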

A Dedicated Team Member Improving Data Quality

Training data plays a major role in how the AI feels to players. We’ve dedicated resources to building up, cleaning, and formatting our datasets to improve AI Dungeon’s models. We now have a team member dedicated to data quality who is constantly working on improving our datasets. This has enabled us to significantly improve both the size and quality of our datasets.

Improve the AI

We’ve relied on “Train the AI” to help us evaluate AI models, but we realized we needed additional data to help us measure model quality. We’ve expanded this functionality into a new system called “Improve the AI” that allows users to opt in to sharing anonymous model data with us. With data from users who have opted in, developers can see exactly where in the AI pipeline problems are happening, which makes them much easier to debug and fix.

New AI Metric and Evaluation Systems

While testing with users is the best way to evaluate the AI experience, it became clear that we also need ways to quickly evaluate AI changes before testing with users.

To do this, we created an “evaluation suite,” where we pass a large dataset of prompts into models. We then compare the results from different models across metrics like repetition, empty outputs, and more. This lets us quickly measure several aspects of AI quality, enabling us to iterate and improve the AI at a much faster pace.
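As a rough sketch of the idea, the snippet below runs a set of prompts through a model and reports two metrics. The function names and the repeated-trigram measure of repetition are assumptions made for illustration; the post does not describe how the real suite computes its metrics.

```python
from typing import Callable, Dict, List


def repeated_trigram_rate(text: str) -> float:
    """Share of three-word sequences that duplicate an earlier one (assumed metric)."""
    words = text.lower().split()
    trigrams = [tuple(words[i:i + 3]) for i in range(len(words) - 2)]
    if not trigrams:
        return 0.0
    return 1.0 - len(set(trigrams)) / len(trigrams)


def evaluate(model: Callable[[str], str], prompts: List[str]) -> Dict[str, float]:
    """Run every prompt through the model and average the metrics."""
    if not prompts:
        raise ValueError("at least one prompt is required")
    outputs = [model(p) for p in prompts]
    n = len(outputs)
    return {
        "empty_rate": sum(1 for o in outputs if not o.strip()) / n,
        "repetition": sum(repeated_trigram_rate(o) for o in outputs) / n,
    }


# Hypothetical stand-in models that return canned text.
def old_model(prompt: str) -> str:
    return "" if "cave" in prompt else "you walk on you walk on you walk on"


def new_model(prompt: str) -> str:
    return "You step forward and the torchlight reveals a narrow stair."


prompts = ["You enter the cave.", "You draw your sword."]
for name, model in [("old", old_model), ("new", new_model)]:
    print(name, evaluate(model, prompts))
```

Running the same prompts through two versions side by side is what makes the numbers comparable.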

New Framework for AI Quality

As we spent more time thinking about improving the AI experience, we realized what “AI quality” means can be ambiguous. We developed a 6-part framework for AI quality that we now use in evaluation and improvement processes.

These 6 parts are:

- Creativity: How creative is the AI’s writing? Is it interesting and compelling? Does it make you want to keep going to find out what might happen next?

- Style: How well does the AI fit the style a user wants? How well does it stay within a certain world or genre?

- Repetition: How repetitive are the AI’s responses?

- Logic: How coherent is the AI’s writing? Does it make sense given what happened before?

- Memory: How well does the AI remember and leverage info about the world and what happened in the story’s past?

- Form: How well does the AI output things that are in good form? Are there hanging sentences, answers that are too short, etc…?

Try a New AI

Finally, we have a new system we’ll release next week called “Try a New AI.” One shortcoming with Train the AI is that because it only evaluates a single action, the measurement doesn’t help us understand how models perform over an entire adventure. It also doesn’t include any way for users to give more qualitative feedback on why they liked or didn’t like an AI version.

To address this, we’re adding a new evaluation method where users can opt in to “Try a New AI.” If they do, they are randomly assigned to one of two models without knowing which one they’re on. They will be able to opt out and switch back to their normal models at any time. When they are finished with the test, they will be asked to rate the AI across the different aspects of AI quality.
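The opt-in, random assignment, and opt-out flow could be sketched roughly as follows. The `BlindTest` class, its method names, and the version labels are hypothetical and only illustrate the flow; they are not the system we are shipping.

```python
import random
from typing import Dict, Optional, Tuple


class BlindTest:
    """Assigns opted-in users to one of two AI versions at random."""

    def __init__(self, candidates: Tuple[str, str],
                 rng: Optional[random.Random] = None) -> None:
        self._candidates = candidates
        self._rng = rng or random.Random()
        self._assignments: Dict[str, str] = {}  # user id -> assigned version

    def opt_in(self, user_id: str) -> None:
        # Assign once; opting in again keeps the same version.
        if user_id not in self._assignments:
            self._assignments[user_id] = self._rng.choice(self._candidates)

    def opt_out(self, user_id: str) -> None:
        self._assignments.pop(user_id, None)

    def version_for(self, user_id: str, normal_version: str) -> str:
        # Server-side lookup only; the assigned name is never shown to the
        # user, which keeps the test blind.
        return self._assignments.get(user_id, normal_version)


test = BlindTest(("version-a", "version-b"), rng=random.Random(0))
test.opt_in("user-1")
assigned = test.version_for("user-1", normal_version="normal")
test.opt_out("user-1")
restored = test.version_for("user-1", normal_version="normal")
print(assigned in ("version-a", "version-b"), restored)
```

Storing the assignment keeps a user on the same version for the whole adventure, which is what lets the final ratings reflect a full play session rather than a single action.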

This new system will give us greater confidence in our ability to measure all the different aspects of AI quality and have a greater understanding of how different AI versions perform and how we can improve them.

We’re excited to get your feedback on the new models and will continually work on improving the AI experience to be the best it can be.